sklearn包中的K-Means算法 1. 函数:sklearn.cluster.``KMeans

*class *sklearn.cluster.``KMeans(n_clusters=8 , init=’k-means++’ , n_init=10 , max_iter=300 , tol=0.0001 , precompute_distances=’auto’ , verbose=0 , random_state=None , copy_x=True , n_jobs=None , algorithm=’auto’ )

主要参数

n_clusters:要进行的分类的个数,即上文中k值,默认是8

init:‘k-means++’, ‘random’ or an ndarray

‘k-means ++’:使用k-means++算法,默认选项

‘random’:从初始质心数据中随机选择k个观察值

第三个是数组形式的参数

n_init :使用不同的初始化运行算法的次数

max_iter :最大迭代次数。默认300

random_state: 设置某个整数使得结果固定

n_jobs: 设置并行量 (-1表示使用所有CPU)

主要属性:

cluster_centers_ :集群中心的坐标

labels_ : 每个点的标签

官网示例:

1 2 3 4 5 6 7 8 9 10 11 12 >>> from sklearn.cluster import KMeans >>> import numpy as np >>> X = np.array([[1, 2], [1, 4], [1, 0], ... [10, 2], [10, 4], [10, 0]]) >>> kmeans = KMeans(n_clusters=2, random_state=0).fit(X) >>> kmeans.labels_ array([1, 1, 1, 0, 0, 0], dtype=int32) >>> kmeans.predict([[0, 0], [12, 3]]) array([1, 0], dtype=int32) >>> kmeans.cluster_centers_ array([[10., 2.], [ 1., 2.]])

5. My code:

1 2 3 4 5 6 7 8 9 10 %matplotlib inline import matplotlib.pyplot as plt from sklearn.cluster import KMeans import numpy as np X = np.array([[1, 2], [1, 4], [1, 0], [4, 2], [4, 4], [4, 0]]) plt.plot(X[:,0],X[:,1],'x') kmeans = KMeans(n_clusters=2).fit(X) print(kmeans.labels_) print(kmeans.predict([[0, 0], [4, 4]])) print(kmeans.cluster_centers_)

聚类算法衡量指标 1. 函数:sklearn.metrics.``silhouette_score

sklearn.metrics.``silhouette_score(X , labels , metric=’euclidean’ , sample_size=None , random_state=None , **kwds )

实例:

https://scikit-learn.org/stable/auto_examples/cluster/plot_kmeans_digits.html#sphx-glr-auto-examples-cluster-plot-kmeans-digits-py

sklearn包中的K-Means算法 1. 函数:sklearn.mixture.``GaussianMixture

sklearn.mixture.``GaussianMixture(n_components=1 , covariance_type=’full’ , tol=0.001 , reg_covar=1e-06 , max_iter=100 , n_init=1 , init_params=’kmeans’ , weights_init=None , means_init=None , precisions_init=None , random_state=None , warm_start=False , verbose=0 , verbose_interval=10 )

实例:

https://scikit-learn.org/stable/auto_examples/mixture/plot_gmm_covariances.html#sphx-glr-auto-examples-mixture-plot-gmm-covariances-py

https://scikit-learn.org/stable/auto_examples/mixture/plot_gmm_selection.html#sphx-glr-auto-examples-mixture-plot-gmm-selection-py

聚类结果可视化 参考代码:A demo of K-Means clustering on the handwritten digits data np.random.randint、np.random.choice、random.sample三种随机函数的用法案例

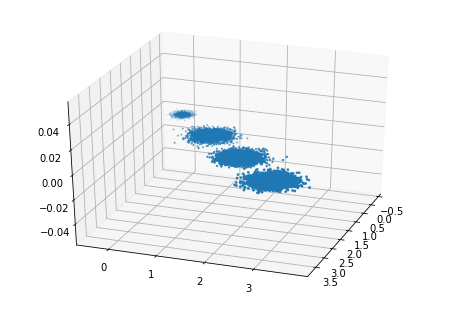

先聚类后PCA 直接对原始的数据进行聚类,结果如下:

Weighted k-means 第一个方法 Clustering the US population: observation-weighted k-means github-observation_weighted_kmeans

这是直接对data加权值,有对应的代码,但是数据结构用的是它自己的project,需要改写。

第二个方法:weighted kernel kmeans WEIGHTED KERNEL K-MEANS 加权核K均值算法理解及其实现(一) tslearn.clustering.GlobalAlignmentKernelKMeans

Reference [1]Weighted Graph Cuts without Eigenvectors:A Multilevel Approach

[2]Kernel k-means, Spectral Clustering and Normalized Cuts

聚类算法一览 https://www.cnblogs.com/lc1217/p/6893924.html

https://www.cnblogs.com/lc1217/p/6908031.html

https://www.cnblogs.com/lc1217/p/6963687.html

GMM vs K-means https://www.jianshu.com/p/a4d8fa39c762

https://www.jianshu.com/p/13898e68c5c6

sklearn https://scikit-learn.org/stable/modules/generated/sklearn.cluster.KMeans.html

https://scikit-learn.org/stable/modules/generated/sklearn.metrics.silhouette_score.html

https://scikit-learn.org/stable/auto_examples/cluster/plot_kmeans_digits.html#sphx-glr-auto-examples-cluster-plot-kmeans-digits-py

https://scikit-learn.org/stable/modules/generated/sklearn.mixture.GaussianMixture.html

https://scikit-learn.org/stable/auto_examples/mixture/plot_gmm_selection.html#sphx-glr-auto-examples-mixture-plot-gmm-selection-py